Understanding the Data Labeling Process: A Step-by-Step Guide

Understanding the Data Labeling Process: A Step-by-Step Guide

In the world of artificial intelligence (AI) and machine learning (ML), the success of a model depends heavily on the quality of its training data. Data labeling, the process of tagging data to make it usable for AI models, is at the heart of this endeavor. Whether you’re developing an autonomous vehicle, training a chatbot, or building a facial recognition system, understanding the data labeling process is essential for creating accurate, reliable, and high-performing models.

This blog offers a step-by-step guide to the data labeling process, outlining each stage from raw data collection to final quality assurance.

1. Data Collection

Before labeling can begin, you need a robust dataset tailored to your project’s objectives. The raw data can come in various forms, such as images, videos, audio recordings, or text documents.

Key Considerations:

- Relevance: Align the data with your project objectives.

- Diversity: Include varied examples to improve model generalization.

- Volume: Ensure you have enough training data.

Example: For a self-driving car project, you’ll need images of roads, pedestrians, vehicles, and traffic signs captured under different conditions.

2. Data Preprocessing

Once raw data is collected, preprocessing is necessary to prepare it for labeling. This step includes cleaning, organizing, and standardizing the data.

Steps in Preprocessing:

- Cleaning: Remove duplicates, incomplete records, or irrelevant data.

- Normalization: Ensure consistency in formats, such as resizing images or converting audio files to a common format.

- Segmentation: Break down large datasets into manageable chunks.

Outcome: A refined dataset ready for annotation.

3. Choosing the Right Labeling Tools

Selecting the right tools for data labeling is critical for efficiency and accuracy. Annotation tools vary based on the type of data and the level of detail required.

Popular Tools:

- Image Annotation: Labelbox, CVAT, Supervisely.

- Text Annotation: Prodigy, LightTag, TagTog.

- Audio Annotation: Audacity, Descript.

Key Features to Look For:

- User-friendly interface.

- Scalability to handle large datasets.

- Collaboration capabilities for team-based projects.

4. Defining Labeling Guidelines

Before annotators begin their work, clear and detailed labeling guidelines must be established. These guidelines ensure consistency and minimize errors.

Guidelines Should Cover:

- Label Categories: Define what each label represents.

- Annotation Rules: Specify boundaries, relationships, and attributes.

- Examples: Provide annotated samples for reference.

Example: In object detection, specify whether to include occluded objects within bounding boxes or not.

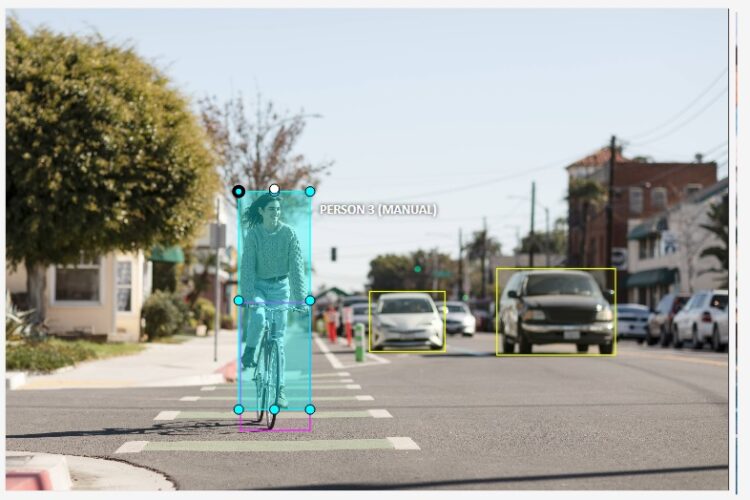

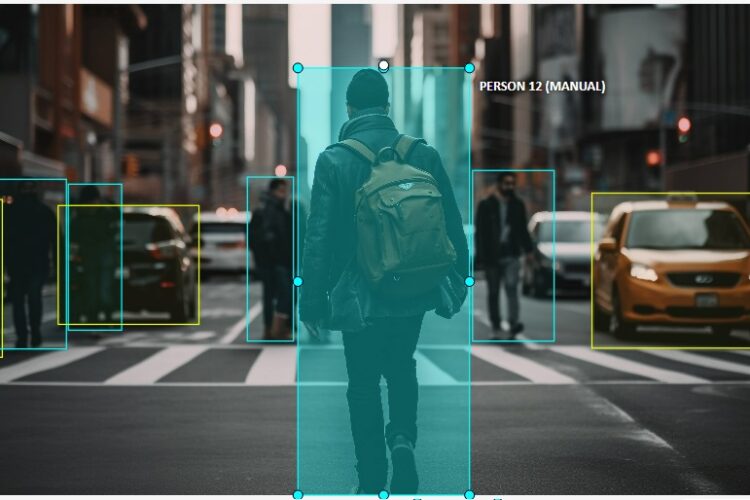

5. Annotation Process

The core of the data labeling process is the annotation itself. During this step, annotators apply labels to the data according to the predefined guidelines.

Techniques by Data Type:

- Image: Bounding boxes, polygons, or keypoints.

- Video: Frame-by-frame labeling or object tracking.

- Text: Entity recognition, sentiment tagging, or text classification.

- Audio: Speech-to-text transcription or sound event tagging.

Collaboration: Teams often use multiple annotators and reviewers to speed up the process while maintaining quality.

6. Quality Assurance

Ensuring the accuracy and consistency of annotations is critical to the success of the model. Quality assurance involves reviewing and validating the labeled data.

Steps for QA:

- Manual Review: Human experts check for errors and inconsistencies.

- Consensus Scoring: Cross-verifying labels by comparing annotations from multiple annotators.

- Automated Tools: AI-powered QA tools identify outliers or potential errors in large datasets.

Outcome: A polished dataset with reliable annotations.

7. Iterative Feedback and Refinement

Annotation is rarely perfect on the first attempt. An iterative process helps refine the labeled data for maximum accuracy.

Process:

- Annotators receive feedback on errors.

- Guidelines are updated to address ambiguities.

- New iterations of labeling improve the dataset quality.

8. Data Integration for Model Training

Once labeling is complete and quality assurance checks are passed, the annotated data is integrated into the AI model training pipeline.

Steps:

- Divide the data into training, validation, and testing sets.

- Feed the training data into the ML algorithms.

- Use the labeled validation set to fine-tune the model.

Example: An autonomous vehicle model uses annotated traffic data for training while validating performance on unseen road scenarios.

9. Monitoring and Maintenance

Even after deployment, data labeling doesn’t stop. Models require continuous updates with new data to adapt to changing conditions and improve performance.

Steps for Maintenance:

- Collect and annotate new data regularly.

- Retrain models with updated datasets.

- Monitor performance to identify areas for improvement.

Conclusion

The data labeling process is a foundational step in AI and ML development. Each stage, from data collection to monitoring, plays a critical role in building accurate, reliable models. By understanding and implementing a systematic labeling process, organizations can ensure their AI systems achieve optimal performance.

Partner with Experts for Seamless Data Labeling

At Outline Media Solutions, we specialize in providing high-quality data labeling services tailored to your project’s needs. With our expertise and scalable solutions, you can focus on innovation while we ensure your data is model-ready.